Like I mentioned a few days ago in my previous post, I’ve been working on a new network library for my pet project. The interface turned out very similar to Boost Asio. I eventually spent a while studying how Boost Asio solves some of the problems, so it influenced my interface and some of the inner workings.

Here, I’m going to dissect a little helper class that I needed on my way to implement the equivalent to boost::asio::io_service::strand. Now, I know I can just use Boost instead of reinventing the wheel, but I already elaborated on that in the previous post.

So, let’s meet our little friend boost::asio::detail::call_stack (or my version of it), and see why you need it.

In some situations, your code needs to know if it is executing in the context of a particular function so it can make the right decisions. You can try to solve this in a couple of different ways.

First, consider we have the following imaginary class to help us out with the sample code:

// Thread safe queue (Multi-producer/Multi-consumer)

template <typename T>

struct ThreadSafeQueue {

public:

// Add a new element

void push(T v);

// Retrieves an element from the queue, optionally blocking to wait for new

// elements if the queue is empty

// \return True if an element was retrieved, false if 'cancel' was called

bool pop(T& v, bool wait);

// Causes any current or future calls to 'pop' to finish with false

void cancel();

};
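
The class above is only declared, since it is imaginary. For anyone who wants to compile the samples, here is one minimal sketch of how it could be filled in, assuming a std::mutex and std::condition_variable based design. The member names (m_mutex, m_cond, m_items, m_cancelled) are made up for this sketch, and so is the choice that a cancelled queue returns false even if elements remain.

```cpp
#include <condition_variable>
#include <mutex>
#include <queue>
#include <utility>

// Sketch only: one possible implementation of the imaginary ThreadSafeQueue
template <typename T>
class ThreadSafeQueue {
public:
    // Add a new element and wake up one waiting consumer
    void push(T v) {
        {
            std::lock_guard<std::mutex> lk(m_mutex);
            m_items.push(std::move(v));
        }
        m_cond.notify_one();
    }

    // Retrieves an element, optionally blocking while the queue is empty.
    // Returns false if nothing was retrieved: the queue was empty and
    // 'wait' was false, or 'cancel' was called
    bool pop(T& v, bool wait) {
        std::unique_lock<std::mutex> lk(m_mutex);
        if (wait)
            m_cond.wait(lk, [this] { return m_cancelled || !m_items.empty(); });
        if (m_cancelled || m_items.empty())
            return false;
        v = std::move(m_items.front());
        m_items.pop();
        return true;
    }

    // Causes any current or future calls to 'pop' to finish with false
    void cancel() {
        {
            std::lock_guard<std::mutex> lk(m_mutex);
            m_cancelled = true;
        }
        m_cond.notify_all();
    }

private:
    std::mutex m_mutex;
    std::condition_variable m_cond;
    std::queue<T> m_items;
    bool m_cancelled = false;
};
```

Note that notify_one and notify_all are called after the lock is released, so a woken consumer does not immediately block on the mutex again.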

The problem

Suppose we have this class that allows us to enqueue work to be done at a later time.

class WorkQueue {

public:

void post(std::function<void()> w) {

m_work.push(w);

}

// Run enqueued handlers. "wait" causes it to keep processing work until

// "stop" is called

void run(bool wait) {

std::function<void()> w;

while (m_work.pop(w, wait)) {

w();

}

}

// Stops processing new work (causing "run" to return)

void stop() {

m_work.cancel();

}

// Some method that lets us know if we are currently executing our run

// method

bool isInRun();

private:

// Some thread safe queue...

ThreadSafeQueue<std::function<void()>> m_work;

};

And then we have this work item, whose behavior depends on whether the current thread is currently executing run or not.

// Some global work queue

WorkQueue globalWork;

void func1() {

// Code needs to behave differently if executing "run" on the globalWork

// instance at the moment

if (globalWork.isInRun()) {

// ...

} else {

// ..

}

}

int main() {

globalWork.post([]() { func1(); });

globalWork.run(false); // Execute any currently enqueued handlers

return 0;

}

How do we implement the WorkQueue::isInRun() method?

The naive way

Maybe have a member variable tell us if we are currently inside WorkQueue::run? Like this…

class WorkQueue {

public:

void run(bool wait) {

m_running++;

// ...

m_running--;

}

bool isInRun() const { return m_running > 0; }

private:

int m_running = 0;

};

Why not use bool m_running instead? So we can deal with recursion. And why would run be recursive? It calls unknown code (the handlers), and that code might do all kinds of things the library didn’t account for, including calling run again. That’s also the reason why you should never hold locks while calling unknown code. It’s all too easy to end up with deadlocks.
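
To make both points concrete, here is a small sketch under stated assumptions: TinyWorkQueue, popOne, depth, and nestedDepthDemo are hypothetical names, and the queue is a cut-down, single-threaded stand-in for WorkQueue. A handler posts more work and then calls run again. That only works because the mutex is released before the handler is invoked, and the counter reaches 2, which a bool could not represent.

```cpp
#include <deque>
#include <functional>
#include <mutex>
#include <utility>

// Hypothetical, cut-down work queue used only to illustrate re-entrancy
class TinyWorkQueue {
public:
    void post(std::function<void()> w) {
        std::lock_guard<std::mutex> lk(m_mutex);
        m_work.push_back(std::move(w));
    }

    void run() {
        ++m_running;
        std::function<void()> w;
        while (popOne(w))
            w();  // The lock is NOT held here, so w may call post() or run()
        --m_running;
    }

    int depth() const { return m_running; }

private:
    // Holds the lock only long enough to remove one handler
    bool popOne(std::function<void()>& w) {
        std::lock_guard<std::mutex> lk(m_mutex);
        if (m_work.empty())
            return false;
        w = std::move(m_work.front());
        m_work.pop_front();
        return true;
    }

    std::mutex m_mutex;
    std::deque<std::function<void()>> m_work;
    int m_running = 0;
};

// Returns the nesting depth seen by a handler that was run recursively
int nestedDepthDemo() {
    TinyWorkQueue q;
    int seen = 0;
    q.post([&] {
        // With a non-recursive mutex held across w(), this post() would deadlock
        q.post([&] { seen = q.depth(); });
        q.run();  // Recursive call from inside a handler
    });
    q.run();
    return seen;  // 2
}
```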

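So, is this solution usable? Not really. The only case where it would work (that I can think of) is if you only ever use a WorkQueue instance from a single thread. The problem isn’t even that m_running is unprotected: the counter is shared by every thread, so it can only tell us that some thread is inside run, not that the current thread is.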

Examples:

// This works correctly

void test1() {

globalWork.post([] {

func1(); // isInRun() returns true here

});

// isInRun() returns false here

func1();

// Explicitly run any handlers

globalWork.run(false);

}

// This doesn't work correctly

void test2() {

// Create worker thread to process work

auto th = std::thread([] { globalWork.run(true); });

globalWork.post([] {

// isInRun() returns true here

func1();

});

// WRONG behaviour: isInRun() can return true here, even though this thread

// is not inside run(). Whether it does depends on whether the worker thread

// has already entered run()

func1();

// shutdown worker thread

globalWork.stop();

th.join();

}
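
The false positive in test2 depends on timing, but it can be reproduced deterministically. The following sketch assumes a hypothetical NaiveQueue that keeps only the counter logic, made atomic so that the wrong answer cannot be blamed on a data race. A worker thread is parked inside run while another thread, which never called run, asks isInRun.

```cpp
#include <atomic>
#include <future>
#include <thread>

// Hypothetical stand-in for the naive WorkQueue: one counter shared by all threads
class NaiveQueue {
public:
    template <typename F>
    void run(F f) {
        ++m_running;
        f();
        --m_running;
    }

    bool isInRun() const { return m_running > 0; }

private:
    std::atomic<int> m_running{0};  // Atomic, so the bug shown is not a data race
};

// Returns what isInRun() reports on a thread that never called run()
bool naiveFalsePositive() {
    NaiveQueue q;
    std::promise<void> entered, release;
    std::future<void> enteredF = entered.get_future();
    std::future<void> releaseF = release.get_future();

    std::thread worker([&] {
        q.run([&] {
            entered.set_value();  // Tell the other thread we are inside run()
            releaseF.wait();      // Stay inside run() until released
        });
    });

    enteredF.wait();
    bool result = q.isInRun();  // This thread is NOT inside run()
    release.set_value();
    worker.join();
    return result;  // true: the counter cannot tell threads apart
}
```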

The one size fits all way

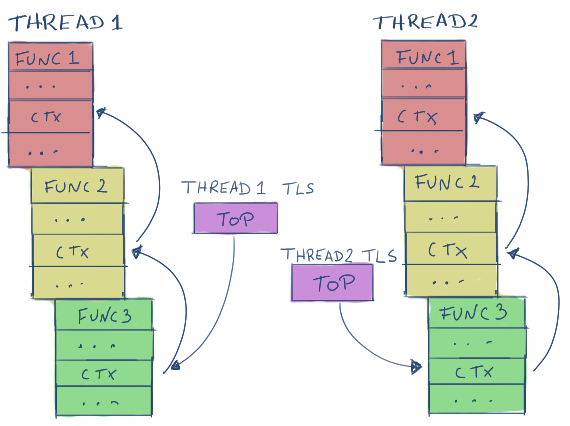

To fix this, our idea of “currently executing” needs to be tied to the thread’s callstack itself.

How? By placing markers in the callstack as we go, with each marker linking to the previous one. By making the most recently placed marker accessible through thread local storage (TLS), we can then iterate through all the markers in the current thread.
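
Before the full class, the linking idea on its own can be sketched as follows. This is a simplified, non-templated illustration, not the Boost code: Marker, onThisThreadsStack, and markerLeaksToOtherThread are hypothetical names. Each marker is an RAII object that pushes itself onto a per-thread list when constructed and pops itself when destroyed.

```cpp
#include <thread>

// Hypothetical, non-templated marker, only to show the linking idea
struct Marker {
    explicit Marker(const void* owner) : owner(owner), prev(top) { top = this; }
    ~Marker() { top = prev; }
    Marker(const Marker&) = delete;
    Marker& operator=(const Marker&) = delete;

    const void* owner;  // What this marker stands for
    Marker* prev;       // Marker placed before this one on the same thread
    static thread_local Marker* top;  // Most recent marker of the current thread
};

thread_local Marker* Marker::top = nullptr;

// Walks the current thread's markers, newest first
bool onThisThreadsStack(const void* owner) {
    for (Marker* m = Marker::top; m; m = m->prev)
        if (m->owner == owner)
            return true;
    return false;
}

// Returns true if a marker placed on the calling thread is visible from a new thread
bool markerLeaksToOtherThread() {
    int owner = 0;  // Any object can serve as an owner
    Marker m(&owner);
    bool seen = true;
    std::thread t([&] { seen = onThisThreadsStack(&owner); });
    t.join();
    return seen;  // false: the new thread has its own, empty list
}
```

Because the markers live on the stack and are destroyed in reverse order of construction, the list always mirrors the nesting of the scopes that are currently active on that thread.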

This is the implementation, extremely similar to Boost’s version. The main differences are the use of the now standard thread_local, and the possibility to iterate the callstack with a range-based for loop.

template <typename Key, typename Value = unsigned char>

class Callstack {

public:

class Iterator;

class Context {

public:

Context(const Context&) = delete;

Context& operator=(const Context&) = delete;

explicit Context(Key* k)

: m_key(k), m_next(Callstack<Key, Value>::ms_top) {

// Dummy non-null value; casting to Value* keeps this compiling for any Value

m_val = reinterpret_cast<Value*>(this);

Callstack<Key, Value>::ms_top = this;

}

Context(Key* k, Value& v)

: m_key(k), m_val(&v), m_next(Callstack<Key, Value>::ms_top) {

Callstack<Key, Value>::ms_top = this;

}

~Context() {

Callstack<Key, Value>::ms_top = m_next;

}

Key* getKey() {

return m_key;

}

Value* getValue() {

return m_val;

}

private:

friend class Callstack<Key, Value>;

friend class Callstack<Key, Value>::Iterator;

Key* m_key;

Value* m_val;

Context* m_next;

};

class Iterator {

public:

Iterator(Context* ctx) : m_ctx(ctx) {}

Iterator& operator++() {

if (m_ctx)

m_ctx = m_ctx->m_next;

return *this;

}

bool operator!=(const Iterator& other) const {

return m_ctx != other.m_ctx;

}

Context* operator*() {

return m_ctx;

}

private:

Context* m_ctx;

};

// Determine if the specified owner is on the stack

// \return

// The address of the value if present, nullptr if not present

static Value* contains(const Key* k) {

Context* elem = ms_top;

while (elem) {

if (elem->m_key == k)

return elem->m_val;

elem = elem->m_next;

}

return nullptr;

}

static Iterator begin() {

return Iterator(ms_top);

}

static Iterator end() {

return Iterator(nullptr);

}

private:

static thread_local Context* ms_top;

};

template <typename Key, typename Value>

thread_local typename Callstack<Key, Value>::Context*

Callstack<Key, Value>::ms_top = nullptr;

Note that it's a templated class, which means that the following types are all distinct, and have their own linked list:

Callstack<Foo>

Callstack<Foo, int>

Callstack<Bar>

Callstack<Bar, SomeData>

Advantages of this solution:

- Type safety. You can use whatever Key/Value types you want

- Every Key/Value type combination has its own linked list, and since in practice most code will only be interested in one particular Key/Value type combination, those checks only iterate through that linked list, thus giving us slightly better performance.

- Decouples the Callstack logic from the Key/Value types. As in, no need to have a WorkQueue::isInRun method like the one proposed above.

- Useful for more things other than checking if we are executing a specific function. For example, you can use it to place markers with rich debug information. Then if the application detects an assert/error, it can iterate through those markers and log context rich information instead of just a callstack.
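
As a sketch of the first three points, the two-argument Context constructor attaches a Value to a marker, and contains hands it back. The block repeats a condensed copy of the Callstack class (iterator omitted, cast written as Value*) so that it compiles on its own. Foo, valueFor, valueDemo, and leaksIntoOtherList are hypothetical names made up for this example.

```cpp
// Condensed copy of the Callstack class, iterator omitted
template <typename Key, typename Value = unsigned char>
class Callstack {
public:
    class Context {
    public:
        Context(const Context&) = delete;
        Context& operator=(const Context&) = delete;

        explicit Context(Key* k)
            : m_key(k), m_val(reinterpret_cast<Value*>(this)), m_next(ms_top) {
            ms_top = this;
        }
        Context(Key* k, Value& v) : m_key(k), m_val(&v), m_next(ms_top) {
            ms_top = this;
        }
        ~Context() { ms_top = m_next; }

    private:
        friend class Callstack<Key, Value>;
        Key* m_key;
        Value* m_val;
        Context* m_next;
    };

    // Returns the address of the value if k is on the stack, nullptr otherwise
    static Value* contains(const Key* k) {
        for (Context* e = ms_top; e; e = e->m_next)
            if (e->m_key == k)
                return e->m_val;
        return nullptr;
    }

private:
    static thread_local Context* ms_top;
};

template <typename Key, typename Value>
thread_local typename Callstack<Key, Value>::Context*
    Callstack<Key, Value>::ms_top = nullptr;

struct Foo {};

// Returns the value attached to foo's marker in Callstack<Foo, int>, or -1 if absent
int valueFor(const Foo& foo) {
    int* v = Callstack<Foo, int>::contains(&foo);
    return v ? *v : -1;
}

int valueDemo() {
    Foo foo;
    int depth = 7;                                  // Arbitrary payload for the marker
    Callstack<Foo, int>::Context ctx(&foo, depth);  // Lives in the <Foo, int> list only
    return valueFor(foo);                           // 7
}

bool leaksIntoOtherList() {
    Foo foo;
    int depth = 7;
    Callstack<Foo, int>::Context ctx(&foo, depth);
    return Callstack<Foo>::contains(&foo) != nullptr;  // false: separate list
}
```

The Value is stored by pointer, so the object passed to the constructor has to outlive the Context. Declaring it just before the Context in the same scope, as done here, guarantees that.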

Usage examples

How to solve problems similar to the one presented by WorkQueue mentioned above:

struct Foo {

template <typename F>

void run(F f) {

// Place marker in the callstack, so any called code knows we are

// executing "run" on this Foo instance

Callstack<Foo>::Context ctx(this);

f();

}

};

Foo globalFoo;

void func1() {

printf("%s\n", Callstack<Foo>::contains(&globalFoo) ? "true" : "false");

}

int main() {

func1(); // Will print "false"

globalFoo.run(func1); // Will print "true"

return 0;

}

How to use it to log context-rich information for asserts or fatal errors (in Debug builds):

struct DebugInfo {

int line;

const char* func;

std::string extra;

template <typename... Args>

DebugInfo(int line, const char* func, const char* fmt, Args... args)

: line(line), func(func) {

char buf[256];

// snprintf truncates instead of overflowing buf on long messages

snprintf(buf, sizeof(buf), fmt, args...);

extra = buf;

}

};

#ifdef NDEBUG

#define MARKER(fmt, ...) ((void)0)

#else

#define MARKER(fmt, ...) \

DebugInfo dbgInfo(__LINE__, __FUNCTION__, fmt, __VA_ARGS__); \

Callstack<DebugInfo>::Context dbgCtx(&dbgInfo);

#endif

void fatalError() {

printf("Something really bad happened.\n");

printf("What we were doing...\n");

for (auto ctx : Callstack<DebugInfo>())

printf("%s: %s\n", ctx->getKey()->func, ctx->getKey()->extra.c_str());

exit(1); // Kill the process

}

void func2(int a) {

MARKER("Doing something really useful with %d", a);

// .. Insert lots of useful code ...

// Something went wrong, let's trigger a fatal error

fatalError();

}

void func1(int a, const char* b) {

MARKER("Doing something really useful with %d,%s", a, b);

// .. Insert lots of useful code ...

func2(100);

}

int main() {

func1(1, "Crazygaze");

return 0;

}

Note that in this case the debug information placed in the stack doesn’t need to be anything related to the stack itself. You can make it as rich as you want, instead of the usual LINE/FUNCTION information.

In the next post, I’ll be dissecting my own variation of boost::asio::io_service::strand, which of course makes use of this Callstack class.

License

The source code in this article is licensed under the CC0 license, so feel free to copy, modify, share, do whatever you want with it.

No attribution is required, but I’ll be happy if you do.

I found that Boost’s call_stack uses static instead of thread_local. I wonder whether the static ms_top will be shared between threads?

Hi Hank,

Thanks for visiting. 🙂

The reason boost doesn’t use the thread_local keyword directly is because not all compilers will support it. Not sure if there are any that don’t support it nowadays. 🙂

I’d say any OS that allows multithreaded processes will provide their own API to implement the same functionality. See https://en.wikipedia.org/wiki/Thread-local_storage

For example, on Windows that is provided with this: https://learn.microsoft.com/en-us/windows/win32/procthread/using-thread-local-storage

In fact, I’m just looking right now at the latest version of boost (1.83), and I see it uses a “static tss_ptr<context> top_” in “call_stack.hpp”.

If you go and look at what that tss_ptr does, you can see that it tweaks the implementation based on what’s available (C++ compiler support and OS).

It actually ends up using thread_local if available.

In tss_ptr.hpp:

If thread_local is supported, it inherits from keyword_tss_ptr, which uses the thread_local keyword.

On Windows, if thread_local is not supported, it inherits from win_tss_ptr, which uses the Win32 API I mentioned above.

Ahaha, actually, right after I posted this comment, when I got further into tss_ptr, I noticed that thread_local was explicitly contained inside it. But really, thanks for your meticulous explanation!

By the way, I have some more questions about the Boost strand implementation, which has been puzzling me a lot these days. I’ll describe them under that blog post!

If you’re talking about my Strands blog post, sure, go ahead.

It’s been over 7 years since I wrote that, so not sure it reflects the current state of boost, but it should help understand what strands do.

I would have to go refresh my memory too.

When I joined Epic Games I stopped using boost because the engine already has most things we needed.

I didn’t use boost at all for those 7 years, and only a few weeks ago I had to get back to it for my new job.